

Every year, thousands of patients experience unexpected side effects from medications that weren’t caught during clinical trials. Traditional methods of spotting these dangers, such as counting how often a drug shows up alongside a symptom in reports, have been slow and noisy, and they often miss real signals. Enter machine learning signal detection: a new wave of AI tools that can find hidden patterns in millions of patient records, social media posts, and insurance claims, spotting dangerous drug reactions before they become widespread.

Why Old Methods Are Failing

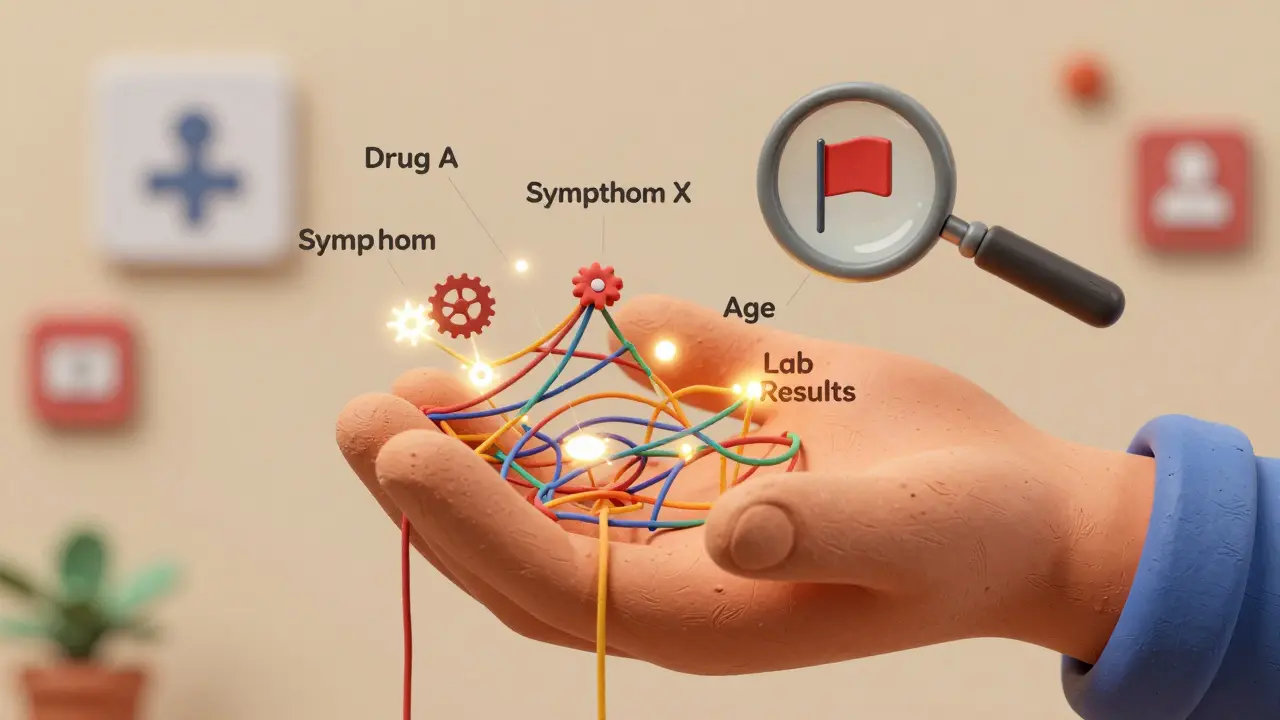

For decades, pharmacovigilance teams relied on simple statistical tools like the Reporting Odds Ratio (ROR) and Information Component (IC). These methods looked at two-by-two tables: how many times did Drug X appear with Symptom Y? But real-world data isn’t that clean. A patient might take five medications, have three chronic conditions, and report fatigue after a bad night’s sleep. Traditional tools can’t untangle that mess. They flag too many false alarms (like linking aspirin to headaches because people take it when they already have one) and miss real dangers lurking in complex combinations.

That’s why the FDA’s Sentinel System and Europe’s EMA started turning to machine learning around 2018. Instead of just counting co-occurrences, these systems analyze dozens of variables at once: age, gender, dosage, timing, medical history, lab results, even how patients describe symptoms in online forums. The goal? Cut through the noise and find signals that matter.
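To make the two-by-two table concrete, here is a minimal sketch of how ROR and IC are commonly computed from report counts. The counts are made up for illustration, the function names are hypothetical, and the IC shown is the plain unshrunk form; production implementations typically add a shrinkage term.

```python
import math

def reporting_odds_ratio(a, b, c, d):
    """ROR with an approximate 95% confidence interval from a 2x2 report table.

    a: reports with Drug X and Symptom Y
    b: reports with Drug X, without Symptom Y
    c: reports without Drug X, with Symptom Y
    d: reports with neither
    """
    ror = (a * d) / (b * c)
    # Standard error of log(ROR), the usual large-sample approximation.
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(ror) - 1.96 * se_log)
    upper = math.exp(math.log(ror) + 1.96 * se_log)
    return ror, lower, upper

def information_component(a, b, c, d):
    """Unshrunk IC: log2 of observed co-reports over the count expected
    if drug and symptom were reported independently."""
    n = a + b + c + d
    expected = (a + b) * (a + c) / n
    return math.log2(a / expected)

# Made-up counts, for illustration only.
a, b, c, d = 20, 80, 10, 890
ror, lower, upper = reporting_odds_ratio(a, b, c, d)
ic = information_component(a, b, c, d)
print(f"ROR = {ror:.2f} (95% CI {lower:.2f} to {upper:.2f}), IC = {ic:.2f}")
```

Both numbers come from the same four counts, which is exactly the limitation described above: nothing about the patient’s other drugs, history, or timing enters the calculation.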

How Machine Learning Finds Hidden Signals

The most effective tools today use ensemble methods, especially Gradient Boosting Machines (GBM) and Random Forests. These aren’t magic boxes. They’re trained on massive datasets, like the Korea Adverse Event Reporting System, which includes 10 years of cumulative reports. Each model learns what a true adverse reaction looks like by comparing millions of cases where a drug was taken and a symptom appeared, against cases where it didn’t.

Here’s what sets them apart:

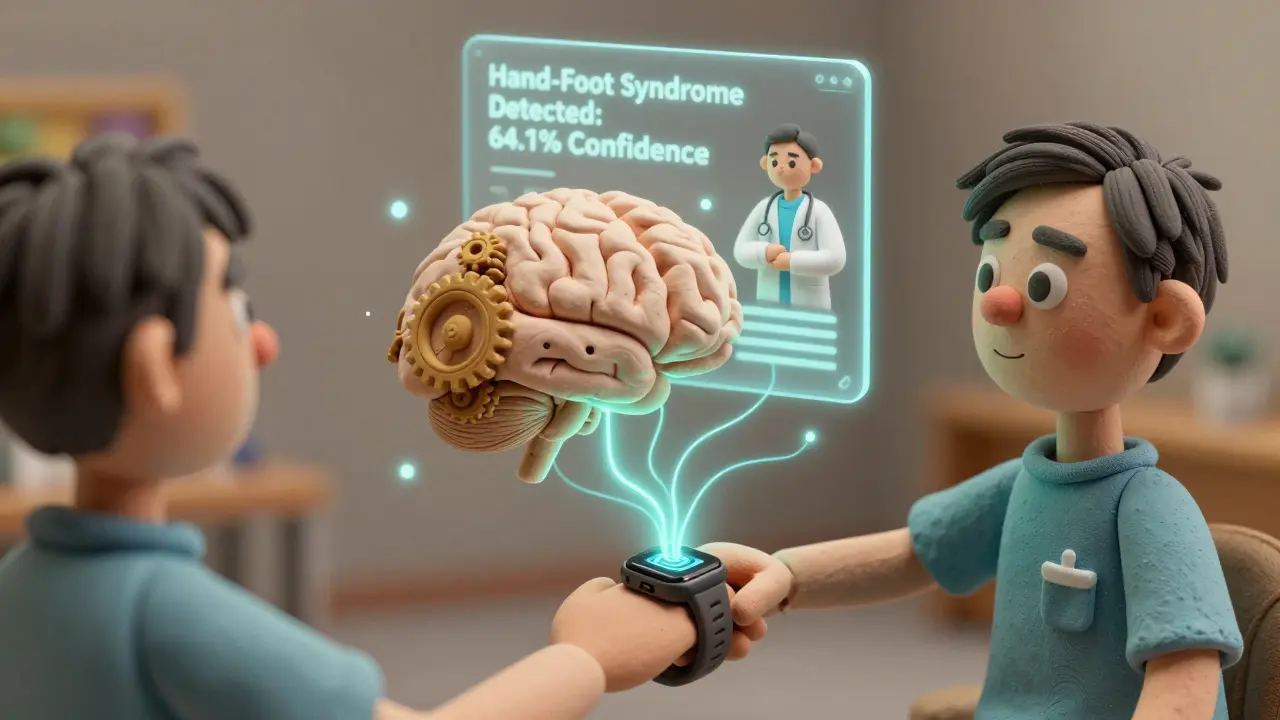

- GBM models detect 64.1% of adverse events that required medical intervention, such as stopping a cancer drug or lowering a dose, compared with just 13% found by random sampling using old methods.

- One deep learning model built to detect Hand-Foot Syndrome from chemotherapy drugs correctly flagged 64.1% of cases needing action.

- These systems don’t just look at the drug and symptom. They consider whether the patient had the symptom before, what other drugs they’re on, and even seasonal trends.
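To show the idea behind gradient boosting without any library, here is a toy sketch in plain Python. It is a simplified variant (one-split "stumps" fitted to the residuals of a logistic model), not the implementation used in the studies mentioned above. The three features (on the drug, symptom present before, number of other drugs), the ten training rows, and all function names are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_stump(X, residuals):
    """Find the one-feature threshold split that best fits the residuals
    in the least-squares sense. Returns (feature, threshold, left, right)."""
    best = None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            left = [r for row, r in zip(X, residuals) if row[j] <= t]
            right = [r for row, r in zip(X, residuals) if row[j] > t]
            if not left or not right:
                continue
            lm, rm = sum(left) / len(left), sum(right) / len(right)
            sse = (sum((r - lm) ** 2 for r in left)
                   + sum((r - rm) ** 2 for r in right))
            if best is None or sse < best[0]:
                best = (sse, j, t, lm, rm)
    return best[1:] if best else None

def fit_gbm(X, y, n_rounds=100, lr=0.3):
    """Boosting loop: each round fits a stump to the current errors
    (label minus predicted probability) and adds a damped correction."""
    pos = sum(y) / len(y)
    base = math.log(pos / (1 - pos))  # start from the overall log-odds
    scores = [base] * len(X)
    stumps = []
    for _ in range(n_rounds):
        residuals = [yi - sigmoid(s) for yi, s in zip(y, scores)]
        stump = fit_stump(X, residuals)
        if stump is None:
            break
        j, t, lm, rm = stump
        stumps.append(stump)
        scores = [s + lr * (lm if row[j] <= t else rm)
                  for row, s in zip(X, scores)]
    return base, lr, stumps

def predict_proba(model, row):
    base, lr, stumps = model
    score = base + sum(lr * (lm if row[j] <= t else rm)
                       for j, t, lm, rm in stumps)
    return sigmoid(score)

# Hypothetical rows: [on_drug, symptom_before, n_other_drugs].
# Label 1 = symptom is new and the patient was on the drug.
X = [[1, 0, 1], [1, 0, 3], [1, 0, 2], [1, 0, 5],
     [1, 1, 2], [1, 1, 4],
     [0, 0, 1], [0, 0, 3], [0, 1, 2], [0, 1, 5]]
y = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]

model = fit_gbm(X, y)
print("new symptom on drug:      ", round(predict_proba(model, [1, 0, 2]), 2))
print("symptom predated the drug:", round(predict_proba(model, [1, 1, 2]), 2))
```

The point of the toy is the second print line: a case where the symptom was already present before the drug gets a lower score, which a simple drug-symptom count cannot express.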

In one study, GBM detected four known adverse reactions to infliximab, including lupus-like syndrome and heart failure, within the first year they appeared in the Korean database. Regulatory agencies didn’t update the drug label until two years later. The AI spotted them early.

Real-World Impact: What Happens When a Signal Is Found?

Finding a signal is only the first step. What happens next? In most cases, it’s not an immediate drug recall. It’s a call to action: monitor patients more closely, adjust dosing, or warn doctors.

For example, when the Hand-Foot Syndrome model flagged a spike in skin blistering among patients on a specific chemotherapy drug, clinics started checking for early signs during routine visits. Only 4.2% of flagged cases led to stopping treatment. Most resulted in topical creams, dose adjustments, or patient education. That’s the sweet spot: catching risks early enough to prevent harm, without overreacting.

The FDA’s Sentinel System has run over 250 safety analyses since going live. In one case, it identified a link between a blood pressure drug and a rare heart rhythm issue, something that had been buried in 1.2 million reports. Within months, doctors received updated guidance. No deaths occurred, because the signal was caught before it became a crisis.

What’s New in 2025: Multi-Modal AI and Real-Time Data

The latest wave of tools doesn’t just use structured data from hospitals. They now pull from unstructured sources: patient forums, Twitter posts, wearable device logs, and even voice recordings from telehealth visits.

The FDA’s Sentinel System Version 3.0, released in January 2024, uses natural language processing to read free-text adverse event reports and automatically judge their validity. If a patient writes, “I felt like my chest was being crushed after taking this pill,” the system doesn’t just count the words. It understands context, urgency, and consistency with known patterns.

By 2026, IQVIA predicts 65% of safety signals will come from at least three different data sources: electronic health records, insurance claims, and social media. This isn’t sci-fi; it’s already happening. A 2025 analysis found that patients on LinkedIn and Reddit were reporting muscle pain from statins weeks before it showed up in official reports. Machine learning models picked up on those clusters and flagged them for review.
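As a rough illustration of what "coming from at least three data sources" can mean in practice, the sketch below counts the distinct sources behind each drug-event pair and reports the share that clear the threshold. The drug names, source labels, and function name are hypothetical, and real pipelines would need far more careful matching of drugs and events across sources.

```python
from collections import defaultdict

def share_corroborated(signal_reports, min_sources=3):
    """Return the fraction of drug-event pairs seen in at least
    `min_sources` distinct data sources, plus the list of those pairs."""
    sources = defaultdict(set)
    for drug, event, source in signal_reports:
        sources[(drug, event)].add(source)
    if not sources:
        return 0.0, []
    corroborated = sorted(pair for pair, seen in sources.items()
                          if len(seen) >= min_sources)
    return len(corroborated) / len(sources), corroborated

# Hypothetical reports: (drug, event, data source).
reports = [
    ("statin_x", "muscle pain", "ehr"),
    ("statin_x", "muscle pain", "claims"),
    ("statin_x", "muscle pain", "social_media"),
    ("drug_y", "rash", "ehr"),
    ("drug_y", "rash", "ehr"),  # a repeat from the same source adds nothing
]

share, pairs = share_corroborated(reports)
print(f"{share:.0%} of candidate signals have 3+ sources: {pairs}")
```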

The Catch: Black Boxes and Data Gaps

It’s not all smooth sailing. One of the biggest complaints from pharmacovigilance teams? “I don’t know why the system flagged this.” Deep learning models, especially neural networks, can be opaque. A doctor might see a signal for a rare liver injury and ask, “Why this patient and not the others?” If the model can’t explain it, regulators won’t accept it.

Interpretability is now a top research priority. Teams are building explainable AI (XAI) layers on top of GBM models, adding visualizations that show which factors mattered most. Was it the dosage? A genetic marker? A recent infection? These tools are getting better, but they’re not perfect yet.

Another problem: garbage in, garbage out. If a hospital’s electronic records are messy (missing dates, wrong drug names, inconsistent coding), then even the best AI will struggle. Smaller clinics and developing countries often lack the data infrastructure to feed these systems. That’s why most successful implementations start small: testing on one drug class, like anticoagulants or diabetes meds, before scaling up.
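A basic record check of the kind described above might look like the following sketch. The field names (`drug`, `onset_date`), the date format, and the tiny synonym table are all assumptions made for illustration; real systems map drug names against full coding dictionaries.

```python
from datetime import datetime

# Hypothetical synonym table mapping raw spellings to one canonical name.
DRUG_SYNONYMS = {
    "asa": "aspirin",
    "acetylsalicylic acid": "aspirin",
    "aspirin": "aspirin",
}

def check_record(record):
    """Return a list of data-quality problems found in one report."""
    problems = []

    raw_date = record.get("onset_date")
    if not raw_date:
        problems.append("missing onset_date")
    else:
        try:
            datetime.strptime(raw_date, "%Y-%m-%d")
        except ValueError:
            problems.append("onset_date not in YYYY-MM-DD form")

    drug = (record.get("drug") or "").strip().lower()
    if drug not in DRUG_SYNONYMS:
        problems.append(f"unrecognized drug name: {record.get('drug')!r}")

    return problems

clean = check_record({"drug": "ASA", "onset_date": "2024-03-01"})
messy = check_record({"drug": "asprin", "onset_date": ""})
print(clean)
print(messy)
```

Running checks like these before training gives a quick count of how many records would mislead a model, which is often the first deliverable of a small pilot.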

Who’s Using This Now?

Big pharma is leading the charge. According to IQVIA, 78% of the top 20 pharmaceutical companies had integrated machine learning into their safety monitoring by mid-2024. Companies like Roche and Novartis now run automated signal detection pipelines that scan global data daily.

Regulators are catching up. The EMA plans to release new guidelines for AI in pharmacovigilance by late 2025. The FDA’s AI/ML Software as a Medical Device Action Plan, launched in 2021, is now being used to evaluate commercial tools. It’s no longer a question of “if” but “how well.”

Open-source tools like those described in Frontiers in Pharmacology (2020) are helping academic labs build prototypes, but few have the polish for enterprise use. Most hospitals and drug companies still rely on commercial platforms from vendors like Oracle, IBM Watson Health, or ArisGlobal.

The Road Ahead

Machine learning signal detection isn’t replacing human experts. It’s giving them superpowers. Pharmacovigilance professionals are no longer drowning in reports. They’re focusing on the signals that matter, with data-driven context to back up their decisions.

By 2028, the global pharmacovigilance market is expected to hit $12.7 billion, with AI driving nearly half the growth. The tools will get faster, smarter, and more integrated. We’ll see real-time alerts triggered when a patient’s wearable detects abnormal heart rhythms after starting a new drug. We’ll see AI cross-referencing genetic data with adverse event reports to predict who’s at highest risk.

But the core principle won’t change: technology should serve safety, not replace judgment. The best systems combine AI’s speed with human experience. A model can flag a signal. A trained pharmacist decides what to do about it.

What You Need to Know

If you’re in healthcare, pharma, or even a patient curious about drug safety:

- Machine learning is now the most powerful tool for spotting hidden drug dangers.

- GBM and Random Forest are the gold-standard algorithms, not deep learning alone.

- False positives are down, but interpretability remains a challenge.

- Real-world data (EHRs, claims, social media) is replacing old spontaneous reports.

- Regulators are adapting, but you won’t see AI-driven recalls tomorrow. You’ll see better warnings, earlier.

This isn’t about replacing doctors or pharmacists. It’s about giving them the clarity they’ve been missing for decades. And that’s a win for every patient who takes a pill.

How accurate are machine learning models in detecting adverse drug reactions?

Gradient Boosting Machine (GBM) models have demonstrated accuracy rates of around 0.8 in distinguishing true adverse drug reactions from false signals, based on validation studies using real-world datasets like the Korea Adverse Event Reporting System. These models outperform traditional statistical methods by detecting 64.1% of adverse events requiring medical intervention, compared to just 13% found using random sampling of old reports.
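To put the two detection rates side by side, the short calculation below applies them to a hypothetical pool of 1,000 adverse events that required medical intervention. Only the 13% and 64.1% figures come from the article; the pool size and names are assumptions.

```python
TRADITIONAL_RATE = 0.13   # share detected with older methods (from the article)
ML_RATE = 0.641           # share detected by GBM models (from the article)

def expected_detected(n_events, detection_rate):
    """Expected number of events flagged at a given detection rate."""
    return n_events * detection_rate

n_events = 1000  # hypothetical pool size
traditional = expected_detected(n_events, TRADITIONAL_RATE)
ml = expected_detected(n_events, ML_RATE)

print(f"Traditional: about {traditional:.0f} of {n_events} events")
print(f"ML model:    about {ml:.0f} of {n_events} events")
print(f"Ratio:       about {ML_RATE / TRADITIONAL_RATE:.1f}x")
```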

What’s the difference between machine learning and traditional signal detection?

Traditional methods like Reporting Odds Ratio (ROR) only look at simple two-way associations between a drug and a symptom. Machine learning considers dozens of variables at once: patient age, dosage, medical history, other medications, lab results, and even how symptoms are described in patient forums. This reduces false positives and uncovers complex patterns traditional tools miss.

Are machine learning tools being used by regulators like the FDA?

Yes. The FDA’s Sentinel System, which uses machine learning for post-market drug safety monitoring, has conducted over 250 safety analyses since full implementation. Version 3.0, released in January 2024, uses natural language processing to automatically interpret adverse event reports without human input. The European Medicines Agency (EMA) is also developing formal guidelines for AI use in pharmacovigilance, expected by late 2025.

Can machine learning detect adverse events before they’re officially recognized?

Yes. In a 2022 study, machine learning models detected four known adverse reactions to infliximab, such as heart failure and lupus-like syndrome, within the first year they appeared in the Korean adverse event database. Regulatory labels were not updated until two years later. The AI spotted the signals months or even years before traditional methods could confirm them.

Why aren’t all drug companies using machine learning yet?

Implementation is complex. It requires large, high-quality datasets, specialized data science skills, and integration with existing safety databases. Smaller companies often lack resources. There’s also a learning curve: pharmacovigilance professionals typically need 6-12 months to become proficient. Many start with pilot projects on one drug class before scaling up.

Is patient data safe when used in machine learning models?

Data privacy is a major concern, and strict protocols are in place. Systems like the FDA’s Sentinel use de-identified, aggregated data. Patient names, addresses, and direct identifiers are removed. Models are trained on statistical patterns, not individual records. Still, concerns about bias, data misuse, and transparency remain active topics in regulatory discussions.
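As a simplified picture of "de-identified, aggregated data", the sketch below drops direct identifier fields and keeps only counts per drug-event pair. The field names and the identifier list are hypothetical, and this is not a description of how Sentinel works; real de-identification also has to handle indirect identifiers such as rare conditions or exact dates.

```python
from collections import Counter

# Hypothetical list of fields treated as direct identifiers.
DIRECT_IDENTIFIERS = {"name", "address", "phone", "email"}

def strip_identifiers(record):
    """Return a copy of the record without direct identifier fields."""
    return {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}

def aggregate_counts(records):
    """Count reports per (drug, event) pair after stripping identifiers."""
    cleaned = (strip_identifiers(r) for r in records)
    return Counter((r["drug"], r["event"]) for r in cleaned)

# Made-up records for illustration.
records = [
    {"name": "A. Patient", "address": "1 Main St", "drug": "drug_y", "event": "rash"},
    {"name": "B. Patient", "address": "2 Oak Ave", "drug": "drug_y", "event": "rash"},
    {"name": "C. Patient", "address": "3 Elm Rd", "drug": "drug_z", "event": "nausea"},
]

counts = aggregate_counts(records)
print(counts)
```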

What’s the biggest limitation of machine learning in this field?

The biggest limitation is interpretability. Many powerful models, especially deep learning systems, act like black boxes: they find signals but can’t clearly explain why. This makes it hard for regulators and clinicians to trust or act on the results. Research into explainable AI is ongoing, but it’s not yet solved.

How long does it take to implement a machine learning signal detection system?

For large pharmaceutical companies, full enterprise-wide implementation typically takes 18 to 24 months. This includes data integration, model training, validation, staff training, and regulatory alignment. Smaller organizations often start with pilot projects on a single drug class, which can be set up in 6-9 months.